Compact AI Acceleration: Geniatech’s M.2 Module for Scalable Deep Learning

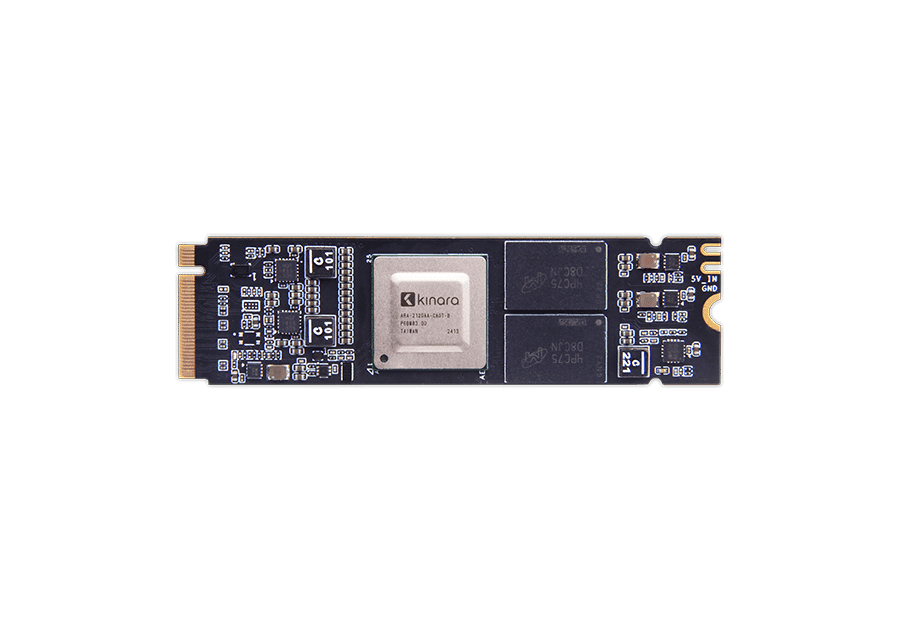

Seamless AI Integration with Geniatech’s Low-Power M.2 Accelerator Module

Artificial intelligence (AI) continues to revolutionize how industries operate, especially at the edge, where rapid processing and real-time insights are not just desirable but critical. The M.2 AI accelerator has emerged as a compact yet powerful solution for meeting the needs of edge AI applications. Delivering robust performance in a small footprint, this module is rapidly driving innovation in everything from smart cities to industrial automation.

The Need for Real-Time Processing at the Edge

Edge AI bridges the gap between people, devices, and the cloud by enabling real-time data processing where it's most needed. Whether powering autonomous vehicles, smart security cameras, or IoT sensors, decision-making at the edge must happen in microseconds. Traditional computing systems have struggled to keep up with these demands.

Enter the M.2 AI Accelerator Module. By integrating high-performance machine learning capabilities into a small form factor, this technology is reshaping what real-time processing looks like. It offers the speed and efficiency businesses need without relying solely on cloud infrastructure, which can add latency and increase costs.

What Makes the M.2 AI Accelerator Module Stand Out?

• Compact Design

One of the standout features of this AI accelerator module is its small M.2 form factor. It fits easily into a variety of embedded systems, machines, or edge devices without the need for extensive hardware modifications. This makes deployment easier and far more space-efficient than with larger alternatives.

• High Throughput for Machine Learning Tasks

Built with advanced neural network processing capabilities, the module offers remarkable throughput for tasks like image recognition, video analysis, and speech processing. Its architecture ensures smooth handling of complex ML models in real time.

• Energy Efficient

Energy consumption is a major concern for edge devices, particularly those that operate in remote or power-sensitive environments. The module is optimized for performance-per-watt while sustaining consistent, reliable workloads, making it suitable for battery-operated or low-power systems.

• Versatile Applications

From healthcare and logistics to smart retail and manufacturing automation, the M.2 AI Accelerator Module is redefining possibilities across industries. For example, it powers advanced video analytics for smart surveillance and supports predictive maintenance by analyzing sensor data in industrial settings.

Why Edge AI Is Gaining Momentum

The rise of edge AI is driven by growing data volumes and an increasing number of connected devices. According to recent industry figures, there are around 14 billion IoT devices operating globally, with projections to surpass 25 billion by 2030. With this shift, traditional cloud-dependent AI architectures face bottlenecks such as increased latency and privacy concerns.

Edge AI addresses these challenges by processing data locally, providing near-instantaneous insights while safeguarding user privacy. The M.2 AI Accelerator Module aligns closely with this trend, enabling companies to harness the full potential of edge intelligence without compromising operational efficiency.

Key Statistics Showing Its Impact

To understand the impact of such technologies, consider these highlights from recent market studies:

• Growth in the Edge AI Industry: The global edge AI hardware market is expected to grow at a compound annual growth rate (CAGR) exceeding 20% through 2028. Devices like the M.2 AI Accelerator Module are pivotal in driving this growth.

• Performance Benchmarks: Labs testing AI accelerator modules in real-world scenarios have demonstrated up to a 40% improvement in real-time inference workloads compared to mainstream edge processors.

• Adoption Across Industries: About 50% of enterprises deploying IoT products are expected to incorporate edge AI applications by 2025 to boost operational efficiency.
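A CAGR figure is easier to interpret with the arithmetic written out: a rate r sustained for n years multiplies the starting market size by (1 + r)^n, and the rate can be recovered from start and end values as (end / start)^(1/n) - 1. The Python sketch below is illustrative only; the function names and the five-year horizon are our own assumptions, not numbers taken from the market studies cited above.

```python
def compound_growth_factor(cagr: float, years: int) -> float:
    """Total growth multiplier after `years` of compounding at `cagr` (0.20 means 20%)."""
    return (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Invert the CAGR formula: the annual rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Hypothetical horizon: five years of compounding at a 20% annual rate.
    factor = compound_growth_factor(0.20, 5)
    print(f"{factor:.2f}x")  # 1.2 ** 5 = 2.48832, printed as "2.49x"

    # Round trip: a market that grows by that factor over 5 years implies a 20% CAGR.
    print(f"{implied_cagr(1.0, factor, 5):.1%}")  # "20.0%"
```

In other words, a market compounding at 20% a year roughly two-and-a-half-times its size over five years, which is why even modest-sounding annual rates translate into large absolute growth.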

With figures like these underscoring its relevance, the M.2 AI Accelerator Module appears to be not just a tool but a game-changer in the shift to smarter, faster, and more scalable edge AI solutions.

Pioneering AI at the Edge

The M.2 AI Accelerator Module represents more than just another piece of hardware; it's an enabler of next-generation innovation. Organizations adopting this technology can stay ahead of the curve in deploying agile, real-time AI applications fully optimized for edge environments. Compact yet powerful, it is a fitting embodiment of progress in the AI revolution.

From its ability to run machine learning models on the fly to its portability and energy efficiency, this module shows that edge AI is not a distant dream. It's happening today, and with tools like this, it's easier than ever to build smarter, faster AI closer to where the action happens.